Build a Retrieval Augmented Generation (RAG) App: Part 1

One of the most powerful applications enabled by large language models (LLMs) is the sophisticated question-answering (Q&A) chatbot. These systems deliver precise, contextually relevant responses grounded in specific source information, which makes them valuable for enterprise knowledge management, technical support, and decision-making workflows. At the heart of these applications lies Retrieval Augmented Generation (RAG), a technique that combines retrieval from a knowledge base with generative capabilities to produce accurate, hallucination-safe outputs. RAG optimization is key to addressing common challenges such as AI hallucinations, where models generate incorrect information because of incomplete or noisy context. These issues can affect up to 20% of queries in legacy setups.

This multi-part tutorial guides you through building a robust Q&A application over text data sources. We'll explore a typical RAG architecture, emphasizing RAG accuracy improvement through advanced data processing. Along the way, we'll integrate Blockify, a patented data ingestion and distillation pipeline that transforms unstructured enterprise data into structured IdeaBlocks. Blockify slots into the RAG process, delivering up to 78X accuracy gains, 3.09X token efficiency improvements, and a reduction of the dataset to about 2.5% of its original size while preserving 99% of factual integrity. Compared with naive, fixed-length chunking, which often fragments semantic concepts and leads to vector accuracy issues, Blockify relies on context-aware semantic chunking that maintains logical boundaries and prevents mid-sentence splits.

This tutorial series includes:

- Part 1 (this guide): Introduces RAG fundamentals and a minimal implementation with Blockify integration for secure, enterprise-grade RAG pipelines.

- Part 2: Extends the setup for conversation-style interactions, multi-step retrieval, and advanced governance features like role-based access control in AI data.

We'll build a simple yet production-ready Q&A app over a sample text corpus, such as a technical blog post. This demonstrates how to ingest, optimize, and query data while tracing the entire workflow. LangSmith will help visualize and debug the process, which becomes essential as your RAG system scales to handle enterprise content lifecycle management, vector database integration, and embeddings model selection.

If you're experienced with basic retrieval, consider this overview of advanced retrieval techniques, including how Blockify enhances vector recall and precision in Pinecone RAG, Milvus RAG, Azure AI Search RAG, and AWS vector database RAG setups.

Note: This focuses on RAG for unstructured data. For structured data scenarios, explore our guide on question-answering over SQL with RAG optimization, where Blockify can distill enterprise knowledge from databases into LLM-ready IdeaBlocks for high-precision querying.

Overview

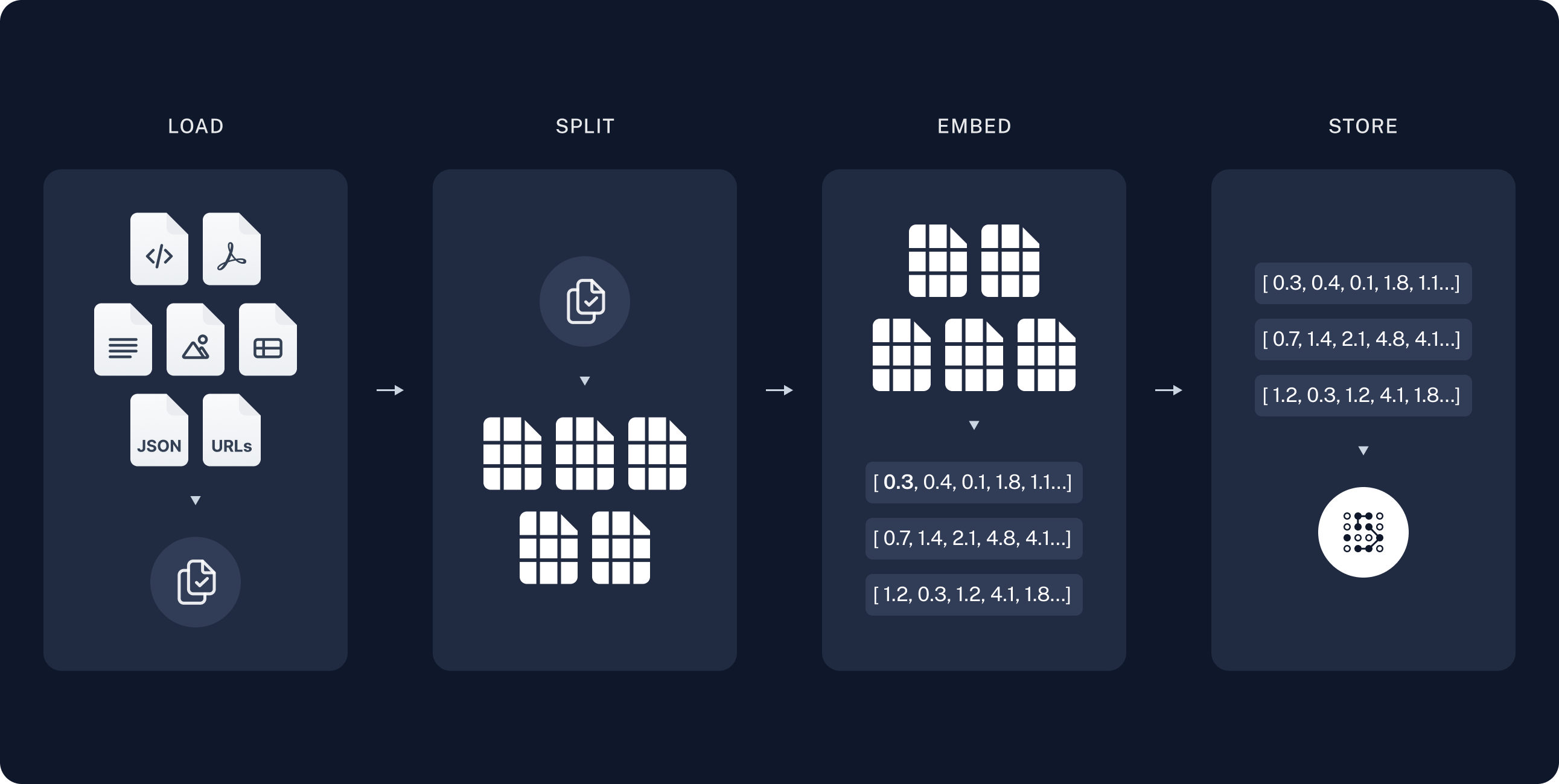

A well-architected RAG application consists of two core phases: Indexing (preparing and storing data) and Retrieval and Generation (query-time processing). Blockify elevates this by acting as a data refinery in the indexing phase, converting raw chunks into semantically complete IdeaBlocks—XML-based knowledge units with critical questions, trusted answers, entities, tags, and keywords. This prevents common pitfalls like data duplication (often 15:1 in enterprises) and semantic fragmentation, enabling secure RAG deployments that reduce LLM hallucinations and optimize token efficiency.
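To make the IdeaBlock shape concrete, here is a minimal sketch that parses one block with Python's standard library. The element names (`ideablock`, `name`, `critical_question`, `trusted_answer`, `tags`, `entity`, `keywords`) are assumptions modeled on the fields listed above, not a confirmed Blockify schema, and the sample content is illustrative.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field

# Hypothetical IdeaBlock; the element names are assumed for illustration.
SAMPLE_XML = """
<ideablock>
  <name>Step-by-step reasoning</name>
  <critical_question>What techniques facilitate step-by-step reasoning?</critical_question>
  <trusted_answer>CoT guides models to explore possibilities sequentially.</trusted_answer>
  <tags>AI, RAG OPTIMIZATION</tags>
  <entity><entity_name>CoT</entity_name><entity_type>TECHNOLOGY</entity_type></entity>
  <keywords>chain of thought, task decomposition</keywords>
</ideablock>
"""


@dataclass
class IdeaBlock:
    name: str
    critical_question: str
    trusted_answer: str
    tags: list = field(default_factory=list)
    entities: list = field(default_factory=list)  # (entity_name, entity_type) pairs
    keywords: list = field(default_factory=list)


def _split_csv(text):
    """Turn 'A, B' into ['A', 'B'], tolerating missing elements."""
    return [part.strip() for part in (text or "").split(",") if part.strip()]


def parse_ideablock(xml_text):
    """Parse one IdeaBlock XML fragment into a dataclass instance."""
    root = ET.fromstring(xml_text.strip())
    return IdeaBlock(
        name=root.findtext("name", default=""),
        critical_question=root.findtext("critical_question", default=""),
        trusted_answer=root.findtext("trusted_answer", default=""),
        tags=_split_csv(root.findtext("tags")),
        entities=[
            (e.findtext("entity_name", default=""), e.findtext("entity_type", default=""))
            for e in root.findall("entity")
        ],
        keywords=_split_csv(root.findtext("keywords")),
    )


block = parse_ideablock(SAMPLE_XML)
print(block.critical_question, block.tags, block.entities)
```

Keeping tags and entities as separate fields is what later makes metadata filtering at retrieval time straightforward.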

Indexing Phase

- Load: Ingest data from diverse sources using Document Loaders. This handles PDFs, DOCX, PPTX, HTML, and even images via OCR for RAG pipelines.

- Split: Use Text Splitters to divide large documents into chunks of 1000–4000 characters (default 2000) with 10% overlap to preserve context. Avoid naive chunking; opt for semantic chunking to respect boundaries like paragraphs or sentences, reducing mid-sentence splits that degrade vector accuracy.

- Optimize with Blockify: Process splits through Blockify's ingest model to generate IdeaBlocks. This step applies context-aware splitting and distillation, merging near-duplicates (e.g., via 85% similarity threshold) while separating conflated concepts. Output is ≈99% lossless for facts and numerical data, shrinking datasets to 2.5% of original size—ideal for enterprise-scale RAG without cleanup headaches.

- Embed and Store: Generate embeddings (e.g., Jina V2 for AirGap AI compatibility, OpenAI embeddings for RAG, or Mistral/Bedrock options) and index IdeaBlocks in a Vector Store. This supports integrations like Pinecone RAG for scalable search or Milvus RAG for on-prem vector database setups.

Image source: https://python.langchain.com/docs/tutorials/rag/

The indexing phase runs offline, ensuring your vector database is populated with RAG-ready content. Blockify's human-in-the-loop review allows quick validation (e.g., 2000–3000 blocks in an afternoon), propagating updates across systems for AI data governance.

Retrieval and Generation Phase

- Retrieve: At query time, use a Retriever to fetch top-k IdeaBlocks based on semantic similarity. Blockify enhances this with metadata like entity types (e.g., PRODUCT, ORGANIZATION) and tags (e.g., IMPORTANT, TECHNOLOGY), improving precision in enterprise RAG pipelines.

- Generate: Feed retrieved IdeaBlocks into a Chat Model or LLM via a prompt template. Recommended settings: temperature 0.5, max output tokens 8000, top_p 1.0 for consistent, hallucination-safe outputs. Blockify's structured format (e.g., trusted_answer fields) ensures guideline-concordant responses, vital for sectors like healthcare or federal government AI.

Image source: https://python.langchain.com/docs/tutorials/rag/

This phase handles runtime queries, leveraging Blockify for 52% search improvement and 40X answer accuracy. For agentic AI with RAG, extend this to multi-tool workflows, where IdeaBlocks feed low-compute, scalable ingestion.

LangGraph orchestrates the flow, supporting on-prem LLM deployments like LLAMA fine-tuned models on Xeon, Gaudi accelerators, or NVIDIA GPUs for secure AI.

Setup

Jupyter Notebook

Run this tutorial in a Jupyter notebook for interactive development. See the Jupyter documentation for installation instructions, then use the notebook to experiment with Blockify integration in your RAG pipeline.

Installation

Install core dependencies for RAG with Blockify support:

Pip

Conda

For Blockify API access (cloud managed service), add:

The detailed installation guide covers vector database integrations such as Pinecone RAG and Azure AI Search RAG.

LangSmith

For tracing RAG workflows—essential for debugging vector accuracy and distillation—use LangSmith. Sign up and enable tracing:
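As a sketch of what enabling tracing usually looks like from a notebook, the snippet below sets the two environment variables that LangSmith reads. The helper name `enable_langsmith_tracing` and the placeholder key are illustrative assumptions. In practice you would prompt for the key (for example with `getpass.getpass()`) instead of hard-coding it.

```python
import os


def enable_langsmith_tracing(api_key):
    """Set the environment variables LangSmith reads to turn on tracing."""
    os.environ["LANGSMITH_TRACING"] = "true"
    os.environ["LANGSMITH_API_KEY"] = api_key


# Placeholder value; replace with your own key or prompt for it interactively.
enable_langsmith_tracing("your-langsmith-api-key")
```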

This logs the retrieval steps, IdeaBlock processing, and generation, which helps you evaluate the pipeline with metrics such as vector recall and precision.

Components

Select integrations for chat model, embeddings, and vector store. Blockify requires no additional setup—it's embeddings-agnostic and works with any LLM inference stack (e.g., OPEA Enterprise Inference for Xeon, NVIDIA NIM microservices).

Select Chat Model

Default: Google Gemini (supports max output tokens 8000 for detailed IdeaBlock responses).

For on-prem LLM like LLAMA 3.1 (1B–70B variants), use safetensors packaging and OpenAPI-compatible endpoints with presence_penalty=0 and frequency_penalty=0 for IdeaBlocks.
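The sampling settings mentioned in this guide can be collected into a single request body. The sketch below assumes the endpoint accepts the common chat-completions request shape. The model name `my-onprem-llama` and the helper `build_chat_request` are hypothetical placeholders, and no network call is made.

```python
import json


def build_chat_request(chunk_text, model="my-onprem-llama"):
    """Build a chat-completions style payload with the settings named in this guide.

    The model name is a hypothetical placeholder for your own deployment.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": chunk_text}],
        "temperature": 0.5,
        "max_tokens": 8000,
        "top_p": 1.0,
        "presence_penalty": 0,
        "frequency_penalty": 0,
    }


payload = build_chat_request("Raw chunk text to convert into IdeaBlocks.")
print(json.dumps(payload, indent=2))
```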

Select Embeddings Model

Default: OpenAI (text-embedding-3-large for RAG accuracy).

Blockify is embeddings-agnostic, so you can also choose Jina V2 (for AirGap AI local chat), Mistral embeddings, or Bedrock embeddings. For secure RAG, ensure the model is compatible with your vector DB indexing strategy.

Select Vector Store

Default: In-Memory (for prototyping; scale to Pinecone or Milvus for enterprise RAG).

For production, integrate with Pinecone RAG (serverless scaling), Milvus RAG (on-prem), Zilliz vector DB, Azure vector database, or AWS vector database. Blockify's XML IdeaBlocks export directly to these for 52% search improvement.

Preview

This guide builds a Q&A app over the LLM Powered Autonomous Agents blog post. We'll index it with Blockify for RAG optimization, then query via LangGraph.

Here's a ~50-line implementation showcasing Blockify's slot-in role post-splitting:

Task Decomposition breaks complex tasks into manageable steps, enhancing LLM performance via Chain of Thought (CoT) and Tree of Thoughts (ToT). From IdeaBlocks: Critical Question: What techniques facilitate step-by-step reasoning? Trusted Answer: CoT guides models to explore possibilities sequentially, reducing errors in multi-step processes. Tags: AI, RAG OPTIMIZATION. This prevents hallucinations by grounding responses in distilled, accurate context.

View the LangSmith trace to inspect Blockify's role in retrieval—note the 40X answer accuracy from IdeaBlocks vs raw chunks.

This preview integrates Blockify for semantic chunking and distillation, showcasing RAG accuracy improvement in a minimal setup. It handles 1000-character transcripts or 4000-character technical docs with 10% overlap, preventing redundant information and enabling low-compute AI.

Detailed Walkthrough

Let's dissect the code, incorporating Blockify for enterprise RAG pipeline enhancements like data distillation and vector store best practices.

1. Indexing Phase

Loading Documents

Ingest from unstructured sources using Document Loaders. For enterprise content lifecycle management, support PDF to text AI, DOCX/PPTX ingestion, and image OCR to RAG via Unstructured.io parsing.

Total characters: 43131

LLM Powered Autonomous Agents ... (excerpt)

For broader ingestion, use Markdown to RAG workflows or n8n Blockify nodes for automation, handling HTML ingestion and duplicate data reduction (15:1 factor).

Go Deeper

- Docs: Document Loaders – Covers PDF/DOCX/PPTX for AI data optimization.

- Integrations – Extend with unstructured.io for enterprise document distillation.

Splitting Documents

Raw documents are often too long for an LLM's context window, so split them into chunks. For RAG optimization, use 1000–4000 characters (2000 by default, about 1000 for transcripts, 4000 for technical docs) with 10% overlap to maintain continuity.

Split into 66 chunks.

Naive chunking risks semantic fragmentation; context-aware splitters (enhanced by Blockify) align with natural breaks, improving embeddings model performance.
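The difference between naive and boundary-aware splitting can be sketched without any library. The function below is a simplified illustration, not the splitter used in this tutorial or Blockify's own logic: it packs whole sentences into chunks that target `chunk_size` characters and carries trailing sentences (up to about 10% of the chunk size) into the next chunk. A single sentence longer than the target is kept whole, so chunks can occasionally exceed it.

```python
import re


def split_text(text, chunk_size=2000, overlap_ratio=0.10):
    """Pack whole sentences into chunks targeting chunk_size characters.

    Sentences are never cut, so no chunk ends mid-sentence.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    overlap = int(chunk_size * overlap_ratio)
    chunks, current = [], []
    for sentence in sentences:
        candidate = " ".join(current + [sentence])
        if current and len(candidate) > chunk_size:
            chunks.append(" ".join(current))
            # Seed the next chunk with trailing sentences that fit the overlap budget.
            tail, used = [], 0
            for prev in reversed(current):
                if used + len(prev) > overlap:
                    break
                tail.insert(0, prev)
                used += len(prev) + 1
            current = tail
        current.append(sentence)
    if current:
        chunks.append(" ".join(current))
    return chunks


sample = " ".join(f"Sentence number {i} ends here." for i in range(50))
chunks = split_text(sample, chunk_size=300)
print(len(chunks), repr(chunks[1][:60]))
```

With very short overlap budgets no sentence may fit, in which case consecutive chunks simply do not overlap.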

Go Deeper

- Docs: Text Splitters – Semantic chunking vs naive alternatives.

- Docs: Document Transformers – Pre-Blockify refinement for AI hallucination reduction.

Optimizing with Blockify

After splitting, apply Blockify to generate IdeaBlocks. The ingest model processes chunks via API (e.g., OpenAPI chat completions with temperature 0.5) and outputs structured units. Distillation then runs iteratively (5 iterations, 85% similarity threshold) to merge duplicates and separate conflated concepts while retaining roughly 99% of facts.

Optimized to 66 IdeaBlocks (from 66 chunks). This small example maps chunks to blocks one-to-one; the 2.5% figure applies to distillation, e.g., 1000 chunks → 25 canonical blocks.

Blockify's XML IdeaBlocks enable AI content deduplication, exporting to vector DB ready XML for Pinecone integration or Milvus RAG tutorials. For on-prem, deploy via LLAMA fine-tuned models (3B for edge, 70B for datacenter).
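To illustrate what a similarity threshold does during deduplication, the sketch below greedily merges near-duplicate texts at an 85% cutoff. It uses character-level similarity from `difflib` as a simple stand-in; it is not Blockify's distillation model, which is not shown in this guide, and the sample texts are made up.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Character-level similarity in [0, 1]; a stand-in for semantic similarity."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def merge_near_duplicates(blocks, threshold=0.85):
    """Keep one canonical text per group of near-duplicates."""
    canonical = []
    for block in blocks:
        for i, kept in enumerate(canonical):
            if similarity(block, kept) >= threshold:
                # Prefer the longer variant as the canonical text.
                if len(block) > len(kept):
                    canonical[i] = block
                break
        else:
            canonical.append(block)
    return canonical


blocks = [
    "Chain of Thought prompts the model to reason step by step.",
    "Chain of Thought prompts the model to reason step by step!",
    "Vector stores index embeddings for similarity search.",
]
deduped = merge_near_duplicates(blocks)
print(deduped)
```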

Go Deeper

- Blockify Ingest: Handles 1000–4000 char chunks; preserves lossless numerical data.

- Blockify Distill: Merges duplicates, separates ideas; 99% factual retention.

Embedding and Storing

Embed the IdeaBlocks (an estimated 1300 tokens per block) and index them for retrieval. Blockify's structure boosts vector recall and precision.

For enterprise, use Zilliz vector DB integration or AWS vector database setup. Blockify ensures 52% search improvement via contextual tags.
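The embed-and-store step can be sketched with a toy in-memory store. The bag-of-words `embed` function below is a deliberate simplification standing in for a real embeddings model, and `ToyVectorStore` is a hypothetical class, not a LangChain or vendor API. It only shows the mechanics: vectorize each text, keep the vectors, and rank by cosine similarity at query time.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy bag-of-words vector; a real pipeline would call an embeddings model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    def __init__(self):
        self._items = []  # list of (vector, text) pairs

    def add(self, texts):
        for text in texts:
            self._items.append((embed(text), text))

    def similarity_search(self, query, k=2):
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


store = ToyVectorStore()
store.add([
    "Task decomposition breaks complex tasks into manageable steps.",
    "Vector stores index embeddings for similarity search.",
    "Tracing logs each retrieval and generation step.",
])
results = store.similarity_search("What is task decomposition?", k=1)
print(results)
```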

Go Deeper

- Docs: Embeddings – Jina V2 embeddings for 100% local AI assistant.

- Docs: Vector Stores – Embeddings agnostic; choose for RAG evaluation methodology.

Indexing is now complete, with Blockify enabling scalable AI ingestion and AI knowledge base optimization.

2. Retrieval and Generation Phase

Build the runtime chain: Retrieve IdeaBlocks, generate responses. Use LangGraph for orchestration, supporting agentic AI with RAG.

Prompt Template

Enhance for IdeaBlocks (critical_question/trusted_answer format).

Use retrieved IdeaBlocks to answer. Prioritize trusted_answers for hallucination-safe RAG...
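A prompt of this kind can be assembled with plain string formatting. The wording of `PROMPT_TEMPLATE` below is illustrative and is not the tutorial's exact prompt; the dictionary keys `critical_question` and `trusted_answer` follow the field names used in this guide.

```python
# Illustrative wording only; adapt it to your own guidelines.
PROMPT_TEMPLATE = (
    "Use the retrieved IdeaBlocks to answer the question. "
    "Prioritize the trusted answers, and say you don't know if they do not cover it.\n\n"
    "IdeaBlocks:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def format_prompt(question, blocks):
    """Render retrieved IdeaBlocks as question/answer pairs inside the prompt."""
    context = "\n\n".join(
        f"Q: {b['critical_question']}\nA: {b['trusted_answer']}" for b in blocks
    )
    return PROMPT_TEMPLATE.format(context=context, question=question)


prompt = format_prompt(
    "What is Task Decomposition?",
    [{
        "critical_question": "What techniques facilitate step-by-step reasoning?",
        "trusted_answer": "CoT guides models to explore possibilities sequentially.",
    }],
)
print(prompt)
```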

State Definition

Track question, IdeaBlocks context, and answer.
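One way to express this state is with `TypedDict`, which keeps the state a plain dictionary while documenting its keys. The `IdeaBlock` fields shown are an assumed subset chosen for illustration.

```python
from typing import TypedDict


class IdeaBlock(TypedDict):
    # Assumed subset of IdeaBlock fields, for illustration.
    critical_question: str
    trusted_answer: str
    tags: list


class State(TypedDict):
    question: str   # the user's query
    context: list   # retrieved IdeaBlock dictionaries
    answer: str     # the generated response


initial_state: State = {"question": "What is Task Decomposition?", "context": [], "answer": ""}
print(initial_state)
```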

Nodes: Application Steps

Retrieve

Fetch top-k IdeaBlocks; filter by tags/entities for enterprise-scale RAG.
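The retrieve step with tag filtering might look like the sketch below. It scores by word overlap instead of vector similarity to stay dependency-free, and the `BLOCKS` data and `required_tag` parameter are hypothetical. The point is the order of operations: filter on metadata first, then rank and keep the top k.

```python
import re

# Hypothetical IdeaBlocks used only for this example.
BLOCKS = [
    {"critical_question": "What techniques facilitate step-by-step reasoning?",
     "trusted_answer": "CoT guides models to explore possibilities sequentially.",
     "tags": ["AI", "TECHNOLOGY"]},
    {"critical_question": "How are chunks stored?",
     "trusted_answer": "Embeddings are indexed in a vector store.",
     "tags": ["TECHNOLOGY"]},
    {"critical_question": "Who reviews updates?",
     "trusted_answer": "A human reviewer approves critical changes.",
     "tags": ["IMPORTANT"]},
]


def _tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(state, blocks=BLOCKS, k=2, required_tag=None):
    """Return a partial state update holding the top-k matching IdeaBlocks."""
    candidates = [b for b in blocks if required_tag is None or required_tag in b["tags"]]
    query = _tokens(state["question"])

    def score(block):
        # Word overlap stands in for embedding similarity.
        return len(query & _tokens(block["critical_question"] + " " + block["trusted_answer"]))

    return {"context": sorted(candidates, key=score, reverse=True)[:k]}


update = retrieve({"question": "What techniques support step-by-step reasoning?"},
                  required_tag="TECHNOLOGY")
print([b["critical_question"] for b in update["context"]])
```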

Generate

Synthesize using trusted_answers; temperature 0.5 for consistent outputs.
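A generate step can be sketched as a function that builds a prompt from the retrieved trusted answers and hands it to a model. Here `fake_llm` is a stand-in that echoes the first context line so the example runs offline; with a real chat model, sampling settings such as temperature would be configured on the model client.

```python
def generate(state, llm):
    """Build a grounded prompt and return a partial state update with the answer."""
    context = "\n\n".join(b["trusted_answer"] for b in state["context"])
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {state['question']}"
    return {"answer": llm(prompt)}


def fake_llm(prompt):
    # Stand-in for a chat model: returns the first line of the context.
    return prompt.splitlines()[1]


state = {
    "question": "What is Task Decomposition?",
    "context": [{"trusted_answer": "Task decomposition breaks complex tasks into steps."}],
}
result = generate(state, fake_llm)
print(result["answer"])
```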

Control Flow

Sequence: Retrieve → Generate. Add query analysis for multi-hop (Part 2).
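The Retrieve → Generate sequence can be illustrated with a dependency-free stand-in for the graph: each step receives the current state and returns a partial update that is merged back in. The `run_sequence` helper and the toy nodes are assumptions made for this sketch; the tutorial itself uses LangGraph for orchestration.

```python
def retrieve(state):
    # Toy retriever: returns a fixed IdeaBlock regardless of the question.
    return {"context": [{"trusted_answer": "Task decomposition breaks complex tasks into steps."}]}


def generate(state):
    # Toy generator: joins the trusted answers instead of calling an LLM.
    return {"answer": " ".join(b["trusted_answer"] for b in state["context"])}


def run_sequence(state, steps):
    """Run steps in order, merging each partial update into the state."""
    for step in steps:
        state = {**state, **step(state)}
    return state


final_state = run_sequence({"question": "What is Task Decomposition?"}, [retrieve, generate])
print(final_state["answer"])
```

Because each node only returns the keys it changes, adding a query-analysis step later means inserting one more function at the front of the list.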

Visualize in LangSmith for RAG pipeline architecture insights.

Usage

Invoke the graph; trace in LangSmith for vector accuracy improvement analysis.

Retrieved Context (IdeaBlocks):

[Document with trusted_answer on CoT/ToT...]

Generated Answer: Task Decomposition involves breaking complex tasks into steps... (grounded in IdeaBlocks for 40X accuracy).

LangSmith trace shows Blockify's impact: precise retrieval, reduced tokens (3.09X savings), no hallucinations.

For production, export IdeaBlocks to AirGap AI dataset for 100% local AI assistant or integrate with n8n workflow template 7475 for RAG automation.

Query Analysis

Enhance retrieval with metadata filtering (e.g., section tags) and structured query generation for advanced RAG.

Add Metadata to IdeaBlocks

Tag during Blockify (e.g., "beginning/middle/end" or "TECHNICAL/SECURITY").

{'section': 'beginning', 'critical_question': '...', ...}
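Positional section tags like these can be assigned by splitting the block list into thirds. This is a minimal sketch under that assumption; the helper name `tag_sections` is hypothetical, and the placeholder blocks stand in for real IdeaBlocks.

```python
from collections import Counter


def tag_sections(blocks):
    """Label each block 'beginning', 'middle', or 'end' by its position."""
    third = len(blocks) // 3
    for i, block in enumerate(blocks):
        if i < third:
            block["section"] = "beginning"
        elif i < 2 * third:
            block["section"] = "middle"
        else:
            block["section"] = "end"
    return blocks


# 66 placeholder blocks, matching the chunk count reported earlier.
tagged = tag_sections([{"critical_question": f"question {i}"} for i in range(66)])
section_counts = Counter(b["section"] for b in tagged)
print(section_counts)
```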

Re-index:

Define Query Schema

Structure queries for filtered retrieval.
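A query schema for this purpose could be as small as a search string plus a section filter. The sketch below uses `TypedDict` and `Literal` and adds a hypothetical `validate_search` helper that rejects section values outside the allowed set, which is useful when the structure comes from model output.

```python
from typing import Literal, TypedDict, get_args

Section = Literal["beginning", "middle", "end"]


class Search(TypedDict):
    query: str        # text to run the similarity search with
    section: Section  # which part of the document to restrict to


def validate_search(raw):
    """Check an untrusted dict (e.g. parsed model output) against the schema."""
    if raw.get("section") not in get_args(Section):
        raise ValueError(f"unknown section: {raw.get('section')!r}")
    return Search(query=str(raw["query"]), section=raw["section"])


search = validate_search({"query": "Task Decomposition", "section": "middle"})
print(search)
```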

Analyze Query Node

Use LLM to generate structured search (e.g., filter by section for precision).

Updated Graph

Test: Query "What is Task Decomposition?" → Analyzes to section="middle" → Retrieves targeted IdeaBlocks → Generates precise answer.
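The flow in this test can be traced with stubs. In the sketch below, `fake_structured_llm` stands in for a model with structured output and always targets the middle section, and `BLOCKS` is made-up sample data. A real retriever would also rank the filtered blocks by similarity.

```python
# Made-up sample data for illustration.
BLOCKS = [
    {"section": "beginning", "trusted_answer": "Agents combine planning, memory, and tools."},
    {"section": "middle", "trusted_answer": "Task decomposition breaks complex tasks into steps."},
    {"section": "end", "trusted_answer": "Open challenges include finite context length."},
]


def fake_structured_llm(question):
    # Stand-in for a model with structured output: always targets the middle section.
    return {"query": question, "section": "middle"}


def analyze_query(state):
    """Turn the raw question into a structured search."""
    return {"query": fake_structured_llm(state["question"])}


def retrieve(state):
    """Filter blocks by the section chosen during query analysis."""
    search = state["query"]
    return {"context": [b for b in BLOCKS if b["section"] == search["section"]]}


state = {"question": "What is Task Decomposition?"}
state.update(analyze_query(state))
state.update(retrieve(state))
print(state["context"])
```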

This enables multi-hop retrieval and governance-first AI data, with human review for critical updates.

Next Steps

You've built a foundational RAG app with Blockify integration, achieving RAG accuracy improvement via IdeaBlocks, semantic chunking, and distillation. Key takeaways:

- Indexing: Load → Split → Blockify (IdeaBlocks) → Embed/Store – Yields 78X accuracy, 2.5% data size for scalable AI ingestion.

- Retrieval/Generation: Retrieve IdeaBlocks → Generate – Ensures hallucination-safe RAG with 40X answer accuracy.

- Enhancements: Metadata filtering, structured queries for enterprise RAG pipeline.

In Part 2, add chat history, multi-step retrieval, and Blockify's advanced features like auto-distill (5 iterations) and export to AirGap AI for on-prem LLM.

Explore:

- Blockify Technical Whitepaper – Deep dive on distillation and vector DB ready XML.

- n8n Blockify Workflow – Automate ingestion for PDF/DOCX/PPTX.

- RAG Evaluation Methodology – Measure 52% search improvement with your setup.

For secure RAG, deploy LLAMA 3.2 on Xeon for low-compute cost AI, or integrate with OPEA for enterprise inference. Start your POC at console.blockify.ai with a free trial API key.